6 technologies behind AI

In a nutshell, AI mimics human intelligence or behaviour. However, when does something go from being a fax machine to being Samantha from Her or HAL from 2001: A Space Odyssey? Where do we draw the line?

Try to define intelligence and behaviour and things get even more complicated. Does it matter if a machine can only exhibit a narrow range of behaviours? Therein lies the rub. The line between computer programs and AI systems is blurry.

In this article, we will explore one way of demystifying AI technologies and drawing (slightly) more concrete lines of separation.

What is Artificial Intelligence?

We can categorise AI technologies in several ways, including:

- Their capacity to mimic human characteristics.

- The technologies enabling human characteristics to be mimicked.

- The real-world applications of the system.

- Theory of Mind.

Each one of these can help us make more sense of a crowded and confusing landscape.

In a previous article, we explored how we can categorise AI technologies by their intelligence. Using this method, we uncovered 3 types of AI: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI) and Artificial Super Intelligence (ASI).

The problem is, AGI and ASI remain (stubbornly) science fiction. Even optimistic estimates place AGI emergence around 2050. This means all existing AI systems are lumped into a single category, ANI, despite massive differences in capability and purpose.

In this article, we will categorise AI systems by the technologies that power them. Using this method, there are 6 categories of AI.

The 6 technologies behind AI: AI technology.

Type #1: Expert systems

Expert systems are the quintessential symbolic AI technologies.

Symbolic means information is stored and transferred in symbolic containers, or entities, rather than as raw data.

Imagine a human anatomy database including these symbolic entities: head, eyes and brain. Alone, these symbols are meaningless. However, we can add considerable meaning to our database with a couple of associations.

Head contains("brain")

Head contains("eyes")

Eyes push_data("brain")

This is how expert systems work: through lists of ifs, thens and associations written in human-like language. Back to our example.

Imagine if our database from above was an expert system we could use during a human anatomy exam we had forgotten to study for. Oops. We could ask questions like "do heads contain eyes" and receive answers. However, if we asked questions like "how many eyes do heads contain", our database and AI would be no help. This is a serious limitation.
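To make that limitation concrete, here is a minimal sketch of the anatomy database in Python, assuming facts are stored as (subject, relation, object) triples. The `ask` and `lookup` helpers and the `eye_count` relation are hypothetical names for illustration, not part of any real expert-system shell.

```python
# A toy symbolic knowledge base: each fact is a (subject, relation, object) triple
# written by a human, mirroring the head/eyes/brain associations above.
FACTS = {
    ("head", "contains", "brain"),
    ("head", "contains", "eyes"),
    ("eyes", "push_data", "brain"),
}


def ask(subject, relation, obj):
    """Yes/no question: is this exact association stored?"""
    return (subject, relation, obj) in FACTS


def lookup(subject, relation):
    """Return every object linked to `subject` by `relation`, sorted for stable output."""
    return sorted(o for s, r, o in FACTS if s == subject and r == relation)


print(ask("head", "contains", "eyes"))  # "Do heads contain eyes?" -> True
print(lookup("head", "contains"))       # ['brain', 'eyes']
# "How many eyes do heads contain?" No human wrote a fact about quantities
# (the hypothetical `eye_count` relation), so there is nothing to return.
print(lookup("head", "eye_count"))      # []
```

The system can only echo associations a person has typed in; anything outside them simply has no answer.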

Obviously, an expert system containing enough data points and data associations has many uses, especially in fields where other forms of AI are unavailable, use cases are simple or when human experts are readily available for clarification. However, for many people, machine learning and Natural Language Processing (NLP) embody the be-all and end-all of real AI.
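The "ifs and thens" of an expert system are usually applied by an inference engine. One common strategy is forward chaining: keep firing any rule whose conditions are all known until no new facts appear. The sketch below assumes rules are (premises, conclusion) pairs; the rule and fact names are hypothetical.

```python
# Each rule is (set of premises, conclusion): IF all premises hold THEN conclude.
rules = [
    ({"has_head", "has_eyes"}, "can_see"),
    ({"can_see", "has_brain"}, "can_recognise_objects"),
]


def forward_chain(initial_facts, rules):
    """Fire rules repeatedly until a full pass derives nothing new."""
    known = set(initial_facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires only when every premise is already a known fact.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"has_head", "has_eyes", "has_brain"}, rules)
print(sorted(derived))
# ['can_recognise_objects', 'can_see', 'has_brain', 'has_eyes', 'has_head']
```

Every conclusion here traces back to a rule a human wrote, which is exactly what makes expert systems transparent and also what limits them.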

Type #2: Machine learning

Machine learning covers a range of statistical techniques giving computers the ability to learn. That is, they progressively improve their capacity to execute a task.

There are more than a dozen of these statistical techniques, one of which is deep learning.
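As a rough illustration of "progressively improving", here is gradient descent fitting a straight line. This is a far simpler technique than deep learning, and the data (generated from y = 2x + 1) is made up; the point is only that the model is never told the rule, yet its error shrinks with each pass over the data.

```python
# Hypothetical training data generated from y = 2x + 1 (the model is not told this rule).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]


def mean_squared_error(w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(epochs=500, learning_rate=0.05):
    w, b = 0.0, 0.0  # start knowing nothing
    history = []
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / len(xs)
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / len(xs)
        # Step both parameters a little way downhill.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(mean_squared_error(w, b))
    return w, b, history


w, b, history = train()
print(f"error after first pass: {history[0]:.3f}, after last pass: {history[-1]:.6f}")
print(f"learned w={w:.3f}, b={b:.3f}")
```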

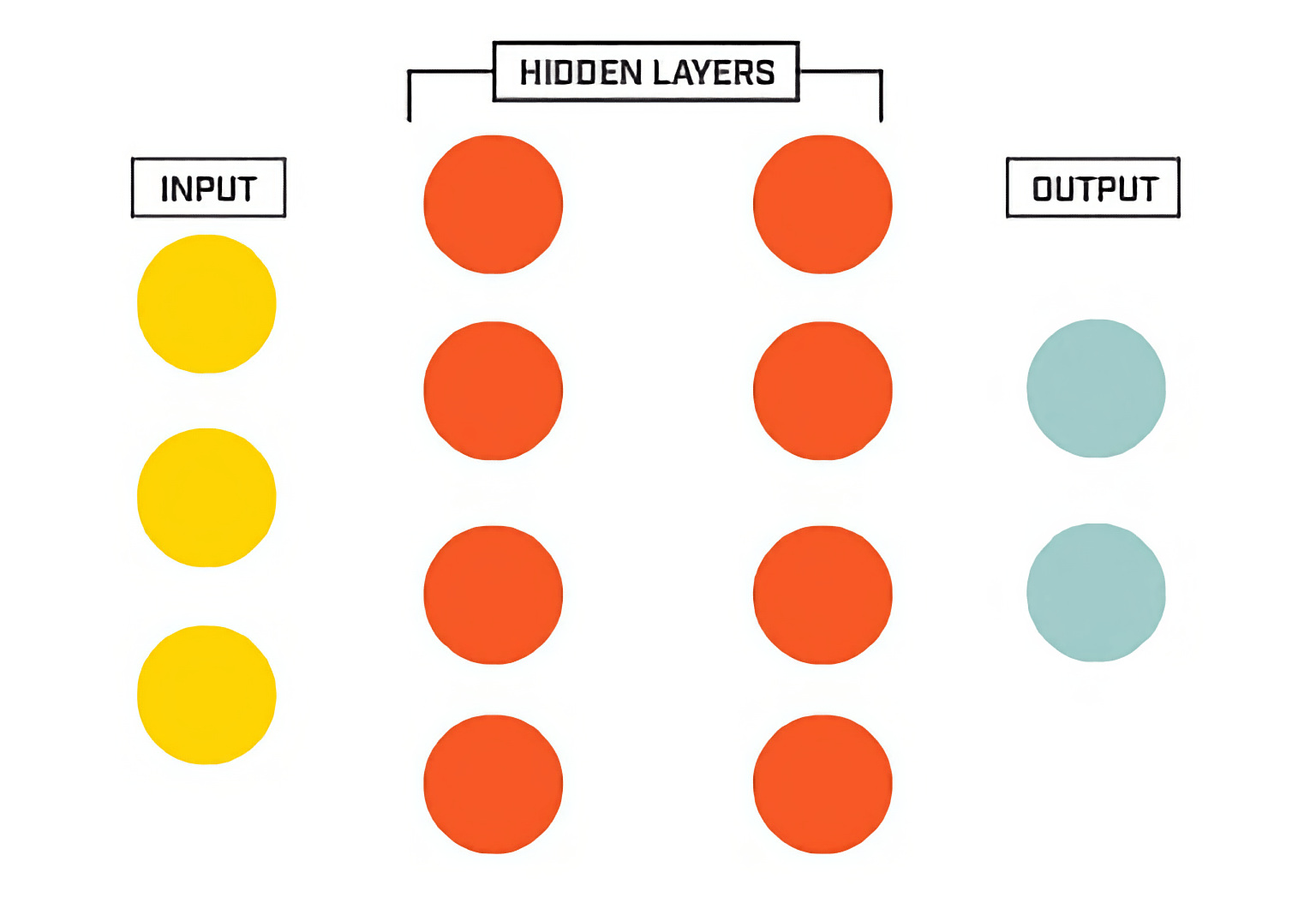

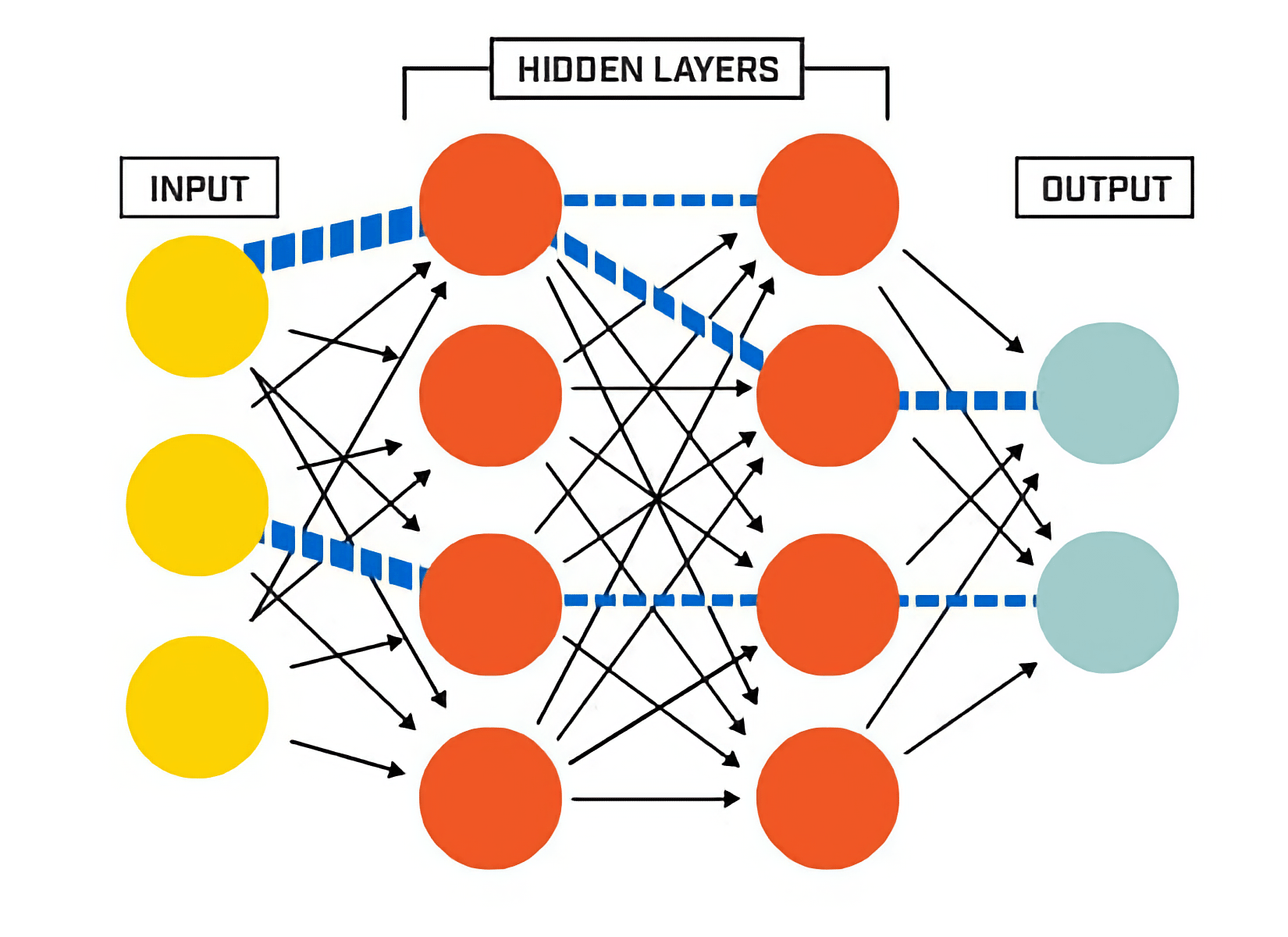

Deep learning using artificial neural networks

Deep learning represents the antithesis of expert systems and symbolic AI.

Whereas humans write expert systems using strings with conscious and subconscious real-world meanings, deep learning allows associations and characteristics to emerge.

These associations are possible because deep learning uses artificial neural networks (sets of nodes arranged in a series of layers). Basically, deep learning is nodes, layers and the connections between them.

The images above depict the basics of deep learning processes.
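Nodes, layers and connections translate directly into code. The sketch below runs a forward pass through a tiny network with 2 inputs, 3 hidden nodes and 1 output. The weights are arbitrary placeholders (training would normally learn them) and the sigmoid activation is just one common choice.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One layer: each node sums its weighted inputs, adds a bias and applies an activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Connections into the hidden layer: one weight list per hidden node (2 inputs each).
hidden_weights = [[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]]
hidden_biases = [0.0, -0.1, 0.2]
# Connections into the single output node (3 hidden inputs).
output_weights = [[1.2, -0.7, 0.5]]
output_biases = [0.05]


def forward(inputs):
    hidden = dense_layer(inputs, hidden_weights, hidden_biases)
    return dense_layer(hidden, output_weights, output_biases)[0]


print(forward([1.0, 0.0]))
```

Nothing in these numbers is a human-readable symbol like "head" or "eyes"; any meaning lives in the pattern of weights, which is why deep learning is called subsymbolic.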

Deep learning, unlike expert systems, is subsymbolic AI. The question "do heads contain eyes" has an answer: yes. And the question "how many eyes do heads contain" has an answer: usually 2. However, whereas an expert system could only answer these questions if the answers were given to it by a human, a subsymbolic AI could answer them despite never being told the actual answers (depending on its training data).

For example, if the training data was a series of photos of humans and animals with species, heads, and eyes labeled, then an AI could answer questions like: what species is in this photo? And, for this species, how many eyes do heads usually contain?
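Answering from labelled examples can be sketched with a 1-nearest-neighbour classifier, a much simpler learner than a deep network. Assume each labelled photo has already been reduced to a hypothetical 2-number feature vector; the species names and eye counts come only from the labels, never from hand-written rules.

```python
import math
from collections import Counter

# Hypothetical labelled examples: (feature vector from a photo, species, eyes counted on the head).
# Real systems learn features from pixels; these two numbers are made up for illustration.
training_data = [
    ((0.90, 0.10), "human", 2),
    ((0.85, 0.15), "human", 2),
    ((0.20, 0.80), "spider", 8),
    ((0.25, 0.75), "spider", 8),
    ((0.50, 0.50), "cat", 2),
]


def predict_species(features):
    """1-nearest-neighbour: copy the species label of the closest training example."""
    closest = min(training_data, key=lambda ex: math.dist(ex[0], features))
    return closest[1]


def typical_eye_count(species):
    """Most common eye count among training examples labelled with this species."""
    counts = Counter(eyes for _, s, eyes in training_data if s == species)
    return counts.most_common(1)[0][0]


species = predict_species((0.88, 0.12))
print(species, typical_eye_count(species))  # human 2
```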

Deep learning in action: AlphaGo

AlphaGo is a good example of deep learning AI's opacity.

The board game Go had long been thought beyond the reach of AI. It's notoriously complex. Even as recently as 2014, Go players and AI researchers alike considered an AI beating a top human Go player impossible. Then AlphaGo beat two of the world's top Go players: Lee Sedol 4-1 in 2016 and Ke Jie 3-0 in 2017.

In a post-game interview, Ke Jie said "last year, I think the way AlphaGo played was pretty close to human beings, but today I think it plays like the God of Go."

AlphaGo did not play Go by thinking through every possible move, nor by copying previous games: AlphaGo learned Go. Its neural networks developed a strong understanding of the game.

Machine learning is a bedrock of modern AI research and development, and it powers many of the more advanced versions of the other types of AI.

Type #3: Natural-language processing

Natural-language processing (NLP) is an area of AI concerned with the interactions between computers and human (natural) languages.

NLP has 2 sub-categories, natural-language understanding and natural-language generation, and is closely related to automated speech recognition (ASR), another category of AI.

NLP is the cornerstone of chatbots (which are all the rage) and virtual assistants (such as Siri).
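A toy chatbot shows the split between understanding and generation. This sketch uses keyword matching and fixed templates, which is far cruder than the statistical models behind Siri or modern chatbots; the intents, keywords and replies are all hypothetical.

```python
import re

# Natural-language understanding: map an utterance to an intent.
INTENT_KEYWORDS = {
    "greeting": {"hi", "hello", "hey"},
    "weather": {"weather", "rain", "sunny", "forecast"},
}


def understand(utterance):
    """Return the first intent whose keywords appear in the utterance."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return "unknown"


# Natural-language generation: turn an intent back into a sentence.
TEMPLATES = {
    "greeting": "Hello! How can I help?",
    "weather": "I can't check the forecast, but I understood you asked about the weather.",
    "unknown": "Sorry, I didn't catch that.",
}


def respond(utterance):
    return TEMPLATES[understand(utterance)]


print(respond("Hello there"))  # Hello! How can I help?
```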

In science fiction books and movies, AI systems nearly always have NLP technologies.

Type #4: Computer vision

Computer vision is to images as natural-language processing (NLP) is to words.

Computer vision is an interdisciplinary field concerning how computers can see and understand digital images and videos.
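To a computer, an image is just a grid of numbers, and a common first step is sliding a small filter over those numbers. The sketch below applies a Sobel-style vertical-edge filter to a made-up 5x5 grayscale image; convolutional neural networks learn filters like this from data rather than having them written by hand.

```python
# A hypothetical 5x5 grayscale image: dark left side (0), bright right side (9).
image = [[0, 0, 9, 9, 9] for _ in range(5)]

# Sobel-style kernel that responds to left-to-right brightness changes (vertical edges).
KERNEL = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def convolve(img, kernel):
    """Slide the kernel over every position where it fits (no padding).

    Like most deep learning libraries, this is technically cross-correlation:
    the kernel is not flipped before sliding.
    """
    k = len(kernel)
    out_h = len(img) - k + 1
    out_w = len(img[0]) - k + 1
    return [
        [
            sum(kernel[i][j] * img[r + i][c + j] for i in range(k) for j in range(k))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


for row in convolve(image, KERNEL):
    print(row)  # each row: [36, 36, 0]
```

Large values mark where the dark and bright regions meet; in a flat region the filter outputs 0.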

There's no better example of computer vision than Facebook's facial recognition AI, which now rivals humans at recognising faces. DeepFace (Facebook's deep learning facial recognition system) achieved 97.35% accuracy on the Labeled Faces in the Wild (LFW) benchmark, whose photos are taken under varied lighting conditions and from varied angles.

Facebook has a big edge in the computer vision game because (in an age of machine learning) data is digital gold. They are now training deep learning models using 3.5 billion Instagram photos and 17,000 hashtags.

Type #5: Automated speech recognition

Automated speech recognition (ASR) and natural-language processing (NLP) are linked, but distinct, categories of AI.

Imagine you are late for a meeting and you need to send a message to your friend, so you open your phone, click Dictation, say "Hi John, I'm on my way" and click send.

In this scenario, does your phone need to know "Hi John" is a greeting? Does your phone need to know "I'm on my way" means you're moving from one location to another location? No. All your phone needs to know is the sounds you made correspond with particular words.
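To show that recognising words does not require understanding them, here is a toy decoder that turns a sequence of phoneme symbols into words by dictionary lookup. It assumes the hard part (turning a waveform into phonemes) is already done; real ASR systems use acoustic and language models, and the lexicon here is a hypothetical fragment using ARPAbet-style symbols.

```python
# Hypothetical pronunciation dictionary: phoneme sequences -> words.
LEXICON = {
    ("HH", "AY"): "hi",
    ("JH", "AA", "N"): "john",
    ("AY", "M"): "i'm",
    ("AA", "N"): "on",
    ("M", "AY"): "my",
    ("W", "EY"): "way",
}
MAX_LEN = max(len(p) for p in LEXICON)


def phonemes_to_words(phonemes):
    """Greedy longest-match decoding: no grammar, no meaning, just sound-to-word lookup."""
    words, i = [], 0
    while i < len(phonemes):
        # Try the longest possible chunk first, then shorter ones.
        for length in range(min(MAX_LEN, len(phonemes) - i), 0, -1):
            chunk = tuple(phonemes[i:i + length])
            if chunk in LEXICON:
                words.append(LEXICON[chunk])
                i += length
                break
        else:
            raise ValueError(f"no word matches the sounds at position {i}")
    return " ".join(words)


sounds = "HH AY JH AA N AY M AA N M AY W EY".split()
print(phonemes_to_words(sounds))  # hi john i'm on my way
```

The decoder never learns that "hi" is a greeting; that interpretation would be NLP's job.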

Another break between ASR and NLP is demonstrated by scientists from the University of California, Berkeley, who trained an AI to attack speech-to-text systems.

The AI could even hide speech altogether!

By starting with an arbitrary waveform instead of speech (such as music), we can embed speech into audio that should not be recognized as speech; and by choosing silence as the target, we can hide audio from a speech-to-text system. - University of California, Berkeley

The AI did not attack NLP by speaking in code (which could be one way of defeating NLP systems). Instead, it attacked ASR by preventing the words being said from being heard.
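The Berkeley team optimised perturbations against a full speech-to-text model. The underlying idea (a tiny change to the audio produces a different output) can be sketched against a toy linear "is this speech?" scorer using the fast gradient sign method, a simpler, different technique swapped in here for illustration. The weights and the clip are random stand-ins, not real audio or a real detector.

```python
import random

random.seed(0)
N = 1000  # number of samples in a hypothetical audio clip

# Toy stand-in for a speech detector: a linear score, positive means "speech".
weights = [random.uniform(-1, 1) for _ in range(N)]


def score(audio):
    return sum(w * a for w, a in zip(weights, audio))


def sign(v):
    return (v > 0) - (v < 0)


# A hypothetical clip with samples in [-1, 1]; flip it if needed so it starts as "speech".
clip = [random.uniform(-1, 1) for _ in range(N)]
if score(clip) <= 0:
    clip = [-a for a in clip]

# Fast gradient sign method: the score's gradient with respect to the audio is `weights`,
# so moving every sample by -epsilon * sign(weight) lowers the score as fast as possible
# for a given maximum per-sample change. Choose the smallest epsilon that flips the label.
l1 = sum(abs(w) for w in weights)
epsilon = 1.01 * score(clip) / l1
adversarial = [a - epsilon * sign(w) for a, w in zip(clip, weights)]

print(f"original score {score(clip):.2f}, adversarial score {score(adversarial):.2f}")
print(f"largest change per sample: {epsilon:.4f} (original samples lie in [-1, 1])")
```

The perturbation is small relative to the signal, yet the toy detector's verdict flips from "speech" to "not speech".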

In a nutshell, whereas NLP is concerned with the meaning of words, and computer vision is concerned with recognising images and videos, ASR is concerned with mapping sounds to words.

Machine learning is common in all three of these domains.

Given any audio waveform, we can produce another that is over 99.9% similar, but transcribes as any phrase we choose (at a rate of up to 50 characters per second). - University of California, Berkeley

Type #6: AI Planning

Automated planning and scheduling (AI Planning) is a branch of AI concerned with strategies and action sequences.

Self-driving cars and other autonomous robots need AI Planning to operate.
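Classical AI Planning can be framed as search: describe each action by its preconditions and effects, then search for a sequence of actions that reaches the goal. The sketch below runs breadth-first search over hypothetical STRIPS-style actions loosely inspired by tasks like opening doors and stacking boxes; real robots and self-driving cars use far more sophisticated planners.

```python
from collections import deque

# Hypothetical STRIPS-style actions: (name, preconditions, facts added, facts removed).
ACTIONS = [
    ("walk_to_door", {"at_start"}, {"at_door"}, {"at_start"}),
    ("open_door", {"at_door", "door_closed"}, {"door_open"}, {"door_closed"}),
    ("walk_to_box", {"at_door", "door_open"}, {"at_box"}, {"at_door"}),
    ("pick_up_box", {"at_box", "hand_empty"}, {"holding_box"}, {"hand_empty"}),
    ("stack_box", {"at_box", "holding_box"}, {"box_stacked", "hand_empty"}, {"holding_box"}),
]


def plan(start, goal):
    """Breadth-first search for the shortest action sequence that makes every goal fact true."""
    start = frozenset(start)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for name, pre, add, delete in ACTIONS:
            if pre <= state:  # action is applicable in this state
                nxt = frozenset((state - delete) | add)
                if nxt not in visited:
                    visited.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None  # goal unreachable


print(plan({"at_start", "door_closed", "hand_empty"}, {"box_stacked"}))
# ['walk_to_door', 'open_door', 'walk_to_box', 'pick_up_box', 'stack_box']
```

If a box is knocked away mid-task, a planner like this can simply be rerun from the new state.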

To get a good sense of what's involved in AI planning, watch this video from Boston Dynamics.

{% include youtubePlayer.html id="rVlhMGQgDkY" %}

Boston Dynamics' Atlas is designed to operate outdoors and inside buildings. You can see Atlas open doors, walk on uneven ground, cross snow-covered terrain, and stack boxes in a warehouse. A couple of minutes in, there's even a scene where a human intentionally attempts to prevent Atlas from stacking boxes by knocking them out of its hands.

As with other types of AI, machine learning plays a big role in advanced AI Planning. Unlike classical control and classification problems, AI Planning requires complex solutions that must be discovered and optimised in multidimensional space.

What are the 6 technologies behind AI?

In this article, we explored AI in terms of the technologies powering AI systems.

The main categories of these are:

- Expert systems

- Machine learning

- Natural language processing

- Computer vision

- Automated speech recognition

- AI Planning

In some ways, expert systems represent simpler AI, while machine-learning-enabled AI systems represent the more exciting advances in AI technology.

Machine learning is commonly used to enable other categories of AI, such as Natural Language Processing (meaning of words), computer vision (meaning of images and videos), automated speech recognition (mapping sounds to words) and AI Planning (complex action sequences).

The AI landscape can sometimes appear opaque and confusing. One thing is clear, however: we are heading towards an AI-powered future, with bots doing more things in more areas of work and leisure.
