History of artificial intelligence

A fun and informative article on the history of AI.

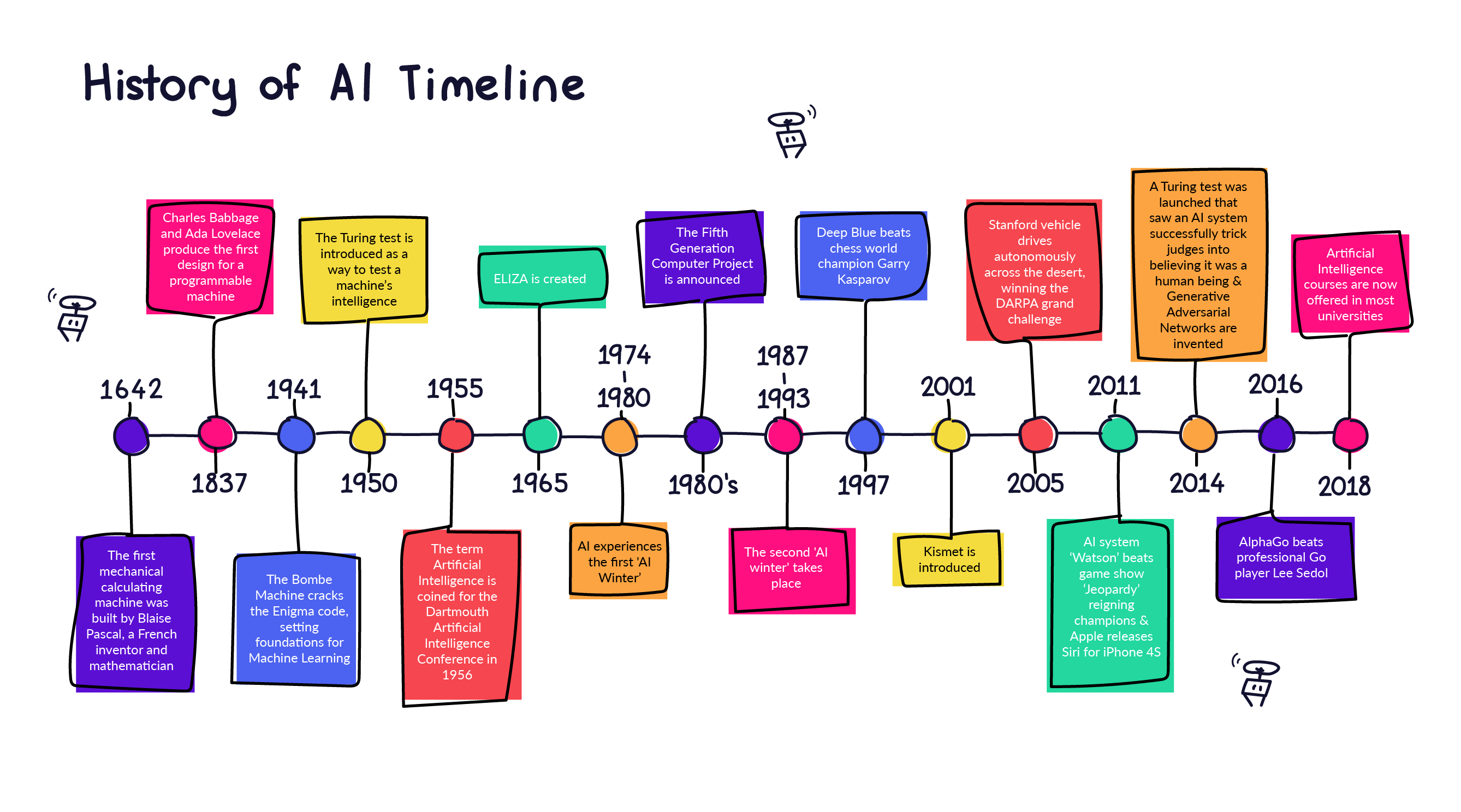

The concept of AI, or an 'artificially intelligent' being, can be traced back to long before the first true computer. In the Epic of Gilgamesh, one of the oldest surviving works of literature, a major character is Enkidu, an artificial human crafted by the gods as a rival for the protagonist, Gilgamesh. The idea appears again in Jewish folklore with the golem, an anthropomorphic being crafted from mud or clay to resemble a human but lacking a soul.

So, what exactly is AI?

In our article on the 3 Types of AI, we explain that artificial intelligence is a branch of computer science that endeavours to replicate or simulate human intelligence in a machine, so that machines can perform tasks that typically require human intelligence. Some programmable functions of AI systems include planning, learning, reasoning, problem solving, and decision making.

We categorise AI technologies in several ways: by their capacity to mimic human characteristics, by the technologies that enable those characteristics to be mimicked, by the real-world applications of these systems, and by theory of mind.

In this article we will discuss the extensive history of artificial intelligence.

The Turing test

Artificial intelligence was an idea explored heavily throughout the 20th century. It appeared in popular culture through icons such as the Tin Man from The Wizard of Oz. It was also taken up by scientists and mathematicians who, by the 1950s, were becoming familiar with the notion of artificial intelligence.

One of them was Alan Turing, a British mathematician and computer scientist who was exploring the concept of artificial intelligence and the mathematics behind it. During World War II, Turing and his colleagues at Bletchley Park famously helped break the Enigma cipher, which German forces used to send secure messages. They did this with the help of the Bombe, an electromechanical codebreaking machine. This work on mechanising reasoning fed into Turing's later ideas about machine intelligence.

Turing was interested in whether a machine could converse with humans so convincingly that they would not realise they were talking to a machine; such a machine would win what he called the 'imitation game'. He explored this in his 1950 paper, Computing Machinery and Intelligence, which asked: if humans can draw on available information and reason to solve problems and make decisions, why can't machines? This led him to consider how intelligent machines might be built, and how their intelligence and ability to think like a human being might be tested. The test he proposed would come to be known as the Turing test.

Although this concept was promising, Turing faced real obstacles in building and testing intelligent machines. Computers of the time were unsophisticated, many could execute commands but not store them, and they were extraordinarily expensive to lease. Because the theory of AI was so new, funding was hard to secure without a proof of concept and the backing of high-profile researchers. This brings us to 1955, when the term 'artificial intelligence' was coined in the proposal for the Dartmouth Summer Research Project on Artificial Intelligence, held at Dartmouth College in the summer of 1956.

Proof of concept

Cliff Shaw, Allen Newell, and Herbert Simon provided an early proof of concept with their program, Logic Theorist, which was presented at the Dartmouth conference. Created in 1955-1956 and designed to mimic the problem-solving skills of a human, Logic Theorist is often described as the first artificial intelligence program. The conference itself was also pivotal to the study of AI as we know it today. Although attendees did not agree on standard methods for the field, there was broad agreement that artificial intelligence was achievable, which paved the way for the next twenty years of research. Around this time, the computer scientist John McCarthy, often referred to as the father of AI, developed the programming language LISP, which went on to become a dominant language in AI research.

Marvin Minsky, a cognitive scientist who hosted the conference along with John McCarthy, also had high hopes for AI, stating in 1967,

"Within a generation... the problem of creating artificial intelligence will substantially be solved."

This prediction initially seemed plausible, and from 1957 to 1974 the field thrived. In the mid-1960s, Joseph Weizenbaum at MIT created ELIZA, a natural language program that used simple pattern matching to hold text conversations, a precursor to today's chatbots.

Although AI grew significantly during this period, progress in AI research stalled between 1974 and 1980. This period is now known as the first 'AI winter', and it saw dramatic drops in government funding and general interest in the field.

The AI winters

One major reason for the first AI winter was simple: computers were not yet powerful enough to store and process the information needed to communicate intelligently. As Hans Moravec, then a doctoral student of John McCarthy at Stanford, put it,

"...computers were still millions of times too weak to exhibit intelligence."

In the 1980s, however, AI saw a resurgence as the British government began funding the field again to compete with Japanese advances in AI. These advances included Japan's Fifth Generation Computer Systems (FGCS) project, as well as developments in the tools and techniques of algorithm design. During this time, major advances were also made in neural network methods, precursors of today's deep learning, which allowed computers to learn from experience. However, this revival was short-lived. From 1987 to 1993, the second AI winter took hold, driven by a lack of funding and the collapse of the market for specialised AI hardware such as Lisp machines. This lull eventually ended in the late 1990s with a shift that changed the relationship between humans and computers forever.

Robots vs. Humans

In the late 1990s, interest in artificial intelligence shifted dramatically, most visibly in 1997. That year, IBM's Deep Blue chess computer became the first computer to defeat a reigning world chess champion, Garry Kasparov, in a match. This victory ushered in a new era of AI and human relationships. The idea of 'bot versus human' competition made headlines again in 2011, when IBM's AI system Watson competed on the quiz show Jeopardy! and beat two of its most successful former champions, Ken Jennings and Brad Rutter. The year 2011 also saw the introduction of Apple's intelligent personal assistant, Siri, on the iPhone 4S.

Around the turn of the millennium, a few years after the Deep Blue match, another large leap was made with Kismet, a robot built at MIT by Cynthia Breazeal. Kismet was designed to interact with humans by recognising and simulating emotions through facial expressions. Throughout the early 2000s, pop culture references to self-aware androids became increasingly common. In 2005, history was made when Stanford's autonomous vehicle 'Stanley' drove itself roughly 212 km across the desert to win the DARPA Grand Challenge.

In 2014, a chatbot called Eugene Goostman was reported to have passed the Turing test after convincing a third of the judges, in short text conversations, that it was a human rather than a computer. The result was controversial, however. Many AI experts argued that the bot succeeded largely by dodging difficult questions, since it claimed to be a 13-year-old boy who spoke English as a second language. Since then, many experts have argued that the Turing test is not a good measure of AI, because it only looks at external behaviour. At a 2015 workshop, researchers called for a new Turing test to be developed.

Another significant advance came in 2014, when Ian Goodfellow and his colleagues introduced Generative Adversarial Networks (GANs). A GAN is a machine learning framework in which two neural networks compete against each other. The 'generator' tries to produce data realistic enough to fool the 'discriminator', while the discriminator learns to tell real data from generated data. Each network learns from the other's feedback, so over many rounds the generator's output becomes more realistic and the discriminator becomes better at spotting fakes. A popular use of GANs is generating photorealistic human faces, but they have applications in many other areas of computer vision as well.
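To make the generator/discriminator tug-of-war concrete, here is a minimal sketch in plain Python. It is a deliberately tiny, hypothetical toy, not how GANs are built in practice. The 'real' data is assumed to come from a normal distribution with mean 1.0. The generator is a single learnable offset, G(z) = z + b. The discriminator is a single logistic neuron, D(x) = sigmoid(w*x + c). Following the training scheme in the original GAN paper, each round takes a few discriminator steps and then one generator step using the 'non-saturating' generator loss. The function name `train_toy_gan`, all hyperparameters, and the small L2 penalty on the discriminator weight (added to keep this toy stable) are illustrative assumptions.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean=1.0, steps=1500, batch=32, d_steps=5,
                  lr_d=0.1, lr_g=0.02, l2=0.01, seed=0):
    """Hypothetical toy GAN on 1-D data; returns (b, w, c)."""
    rng = random.Random(seed)
    b = -3.0          # generator G(z) = z + b, starts far from the real data
    w, c = 0.0, 0.0   # discriminator D(x) = sigmoid(w*x + c)

    for _ in range(steps):
        # Discriminator: a few gradient-descent steps on binary cross-entropy,
        # labelling real samples 1 and generated samples 0.
        for _ in range(d_steps):
            gw, gc = l2 * w, 0.0  # L2 penalty on w (assumption for stability)
            for _ in range(batch):
                xr = rng.gauss(real_mean, 1.0)   # real sample
                xf = rng.gauss(0.0, 1.0) + b     # generated sample G(z)
                dr = sigmoid(w * xr + c)
                df = sigmoid(w * xf + c)
                # d/dlogit of -log D(xr) is (dr - 1); of -log(1 - D(xf)) is df
                gw += ((dr - 1.0) * xr + df * xf) / batch
                gc += ((dr - 1.0) + df) / batch
            w -= lr_d * gw
            c -= lr_d * gc

        # Generator: one step on the non-saturating loss -log D(G(z)),
        # i.e. move b so the discriminator rates generated samples as "real".
        gb = 0.0
        for _ in range(batch):
            xf = rng.gauss(0.0, 1.0) + b
            df = sigmoid(w * xf + c)
            gb += (df - 1.0) * w / batch   # chain rule: dlogit/db = w
        b -= lr_g * gb

    return b, w, c


if __name__ == "__main__":
    b, w, c = train_toy_gan()
    print(f"learned offset b = {b:.3f} (real data mean is 1.0)")
```

Because this generator can only shift its output, it 'wins' once b lands near the real mean. At that point the discriminator can do little better than guessing, so its output should hover around 0.5. Real GANs use deep networks on both sides, but the alternating update pattern is the same.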

In 2016, the landmark Google DeepMind Challenge Match saw AlphaGo, a computer Go program, compete head-to-head against Lee Sedol, an 18-time world Go champion. AlphaGo won four of the five games, with Lee winning only the fourth. This was especially notable because Go had long been considered a domain where human players would keep the upper hand over computers for years to come. The match is often compared to the 1997 chess match between Garry Kasparov and Deep Blue.

These rapid advances were made possible in large part by steady growth in computing power, often summarised by Moore's Law: the observation that the number of transistors on a chip roughly doubles every two years. This fundamental increase in processing capacity has combined with other advances, such as the massively parallel processing capability of graphics processing units (GPUs) and the spread of large-scale distributed computing systems. Together, they have finally allowed the 'practice' of AI to catch up to the 'theory', letting computers match, and at some specific tasks even exceed, human performance.
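To see why a doubling trend matters so much, here is the arithmetic implied by an idealised two-year doubling period. Treat it as a simplified trend, not a guarantee. If a chip has N_0 transistors at time zero, after t years the model predicts:

```latex
N(t) \approx N_0 \cdot 2^{t/2}
\qquad\Longrightarrow\qquad
\frac{N(20)}{N_0} \approx 2^{20/2} = 2^{10} = 1024
```

In other words, two decades of doubling every two years corresponds to roughly a thousandfold increase.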

AI now and in the future

Today, we are seeing constant development in the field of AI as computer systems become more advanced and 'intelligent'. Tech giants such as Google and Amazon use machine learning extensively, processing enormous volumes of data every day to build predictive models of consumer behaviour. They also devote immense resources to advancing AI as an academic field, with a particular focus on computer vision and natural language processing. Since around 2018, universities have expanded their undergraduate coursework in artificial intelligence, and enrolments have grown rapidly.

Advances in artificial intelligence show no signs of slowing down. The world is changing rapidly, and the further we move into an era dominated by artificially intelligent technology, the more important it becomes for bots and humans to work side by side.
