Why are software estimates so hard?

Besides the fact that an estimate is a prediction of the future, there is a very human element to why software estimates are so hard. The psychology behind software estimations is fascinating and some studies have turned up very interesting results. Knowing about your own biases and human psychology can help improve the realism of software estimates. As Dan Ariely sums up nicely with the title of his book, we are Predictably Irrational.

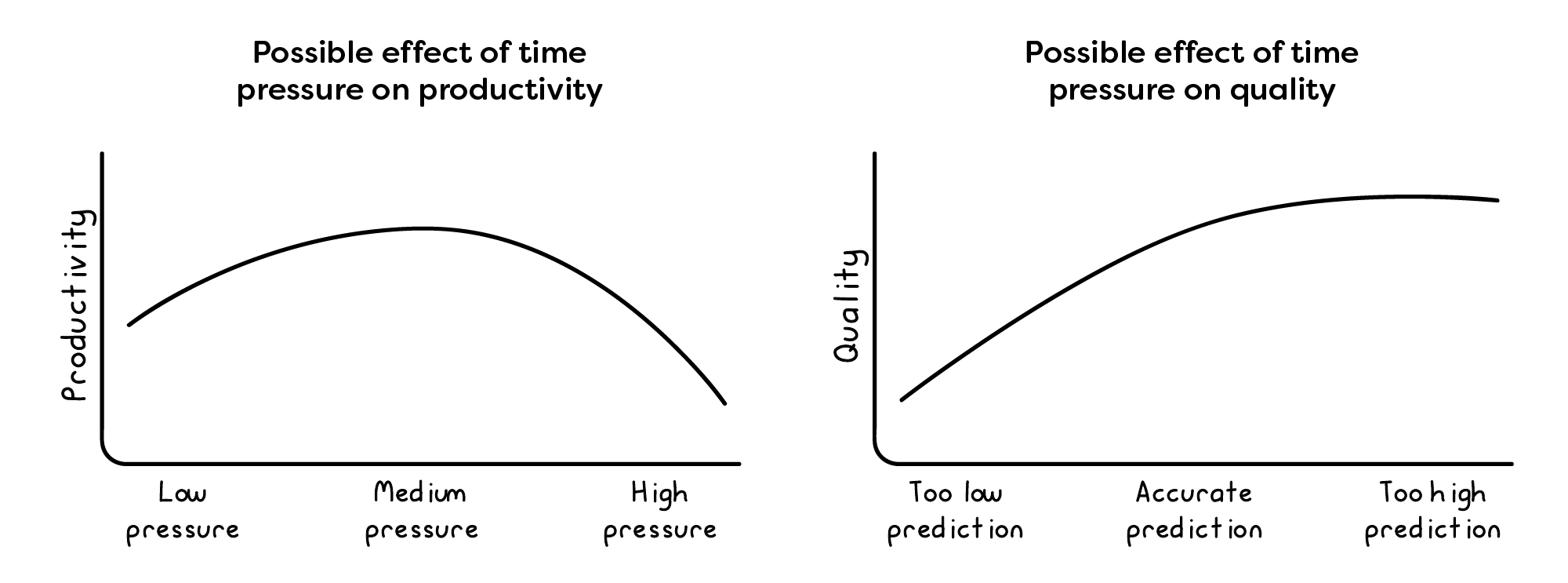

To do better time estimations it is important to understand our biases, so we know what signs to look out for. Unlike predicting the weather, where the prediction has no impact on the outcome, a software estimate does have some impact on the outcome. For example, if the estimate is too low and people have to rush to finish on time, the quality of the outcome can drop off. So, what is the possible effect of time pressure on productivity and quality? Before we dig deeper into this question later, let's get the thought processes working.

As a society, are we rewarding over-optimism and contributing to poor software estimations? Imagine a scenario where multiple bidders compete for a software development contract and one of them submits an estimate that results in significantly lower costs. Since cost reduction is a driver for many organisations, this bidder wins the contract (with a great sales team) but the project ends up blowing out. Who is at fault here?

Is it the bidder who lowballed the estimation? Is it the customer who emphasised cost as a key factor? Or is it the wider society and its lack of education on estimations? This is a tough one. The bidder may not have purposefully lowballed the estimation, but they were rewarded for doing so. The customer probably wanted a fixed-time and fixed-scope contract, which is waterfall, but said they wanted something Agile. And the education sector is probably trying to teach undergrads and students how to estimate better, but the youngins don't have the experience yet to grasp the importance of what they are being taught.

Rewarding overoptimistic estimations can have a negative effect. As a practitioner, I have experienced this paradox many times in my career and lost a few nights' sleep over it. The scenario also happens when people are trying to get approval for projects from their managers. Have you ever put in a bid for a project and felt pressure to bring the costs down? Or have you ever been asked how long something will take and been told that it is too long? I dare say you have. So, the best chance we have at breaking the deadlock is through education and the use of science to help make better decisions.

Magne Jørgensen from Simula Research Laboratory has been working and publishing on the topic of software estimations since 1995. What were you doing in 1995? Me, I was 2 years out of high school, studying for an undergraduate degree and having a great time living the student life. His recent book, Time Predictions: Understanding and Avoiding Unrealism in Project Planning and Everyday Life, is full of interesting studies and examples. In this article on why software estimates are so hard, I reference a lot of his work.

{% include youtubePlayer.html id="AIhXGI6i9uA" %}

The reason I really like Jørgensen's book is that he uses science to bring evidence to support (or not) some industry practices. He is not motivated by selling his brand. The Kindle edition of his book is free and you pay for the paperback, but I reckon that would be just to cover printing costs. This guy is giving back.

Why are software developers (and designers) so overoptimistic with their estimations?

People generally think they are better than average, especially when completing relatively simple tasks that they are competent at. The better-than-average effect can be seen in how people rate themselves on many tasks such as driving, grades on exams, and so on. This is a type of overoptimism.

Another example is highlighted by Hamilton, who published an empirical analysis of the returns to self-employment in the Journal of Political Economy. It was found that entrepreneurs have a high probability of failure and earn, on average, 35% less than employees in similar jobs after 10 years in business. I laughed out loud when I found this one. As the founder of a tech start-up I definitely thought, what have I done?

Jørgensen dedicates a chapter of this book to overoptimistic predictions. That's how important it is to software estimations. Interestingly, he looks into the benefits of overoptimism from an evolutionary point of view, starting back in the stone age. He asks the question, could it be that overoptimism is rational and adaptive? Assuming that there is a small chance of a hunter inventing a new weapon, it would not seem a good decision to waste time (and energy) on this task. But if the hunter was able to innovate, then they could bring themselves and their tribe a distinct advantage in their competitive landscape.

Similarly, for an organisation's ambitious projects, most of them will fail. But if one of them is successful, it could bring them a competitive advantage in the business world. Have you ever been involved in a conversation where someone wants an innovative product but is not willing to risk failure? This is another paradox that some people cannot logically move past so they end up sticking with the status quo.

So, why are software developers (and designers) so overoptimistic with their estimations? It would seem it is innately human and there is some evolutionary past to this. On one hand, it's best to stick with the herd as there is safety in numbers. On the other hand, fortune favours the bold. It seems being overoptimistic runs deep in our DNA, and further below we look at seven time prediction biases you must know.

What is the possible effect of time pressure on productivity and quality?

Software estimations can have an effect on the outcome of a project. This is important to know because whatever estimation process you use will likely influence the outcome of the project. And no doubt you want to set up your project for success.

There seems to be a correlation between a software estimation and the productivity and quality of the outcome. Nan and Harter studied the impact of budget and schedule pressure on software development cycle time and effort and published their results in IEEE Transactions on Software Engineering. They found that the effects of budget and schedule pressure on software cycle time and effort are U-shaped functions. The diagrams can be seen below.

One of our values at CodeBots is: urgency but not rushed. For the possible effect on productivity as seen in the above diagram, it makes sense in my mind that high pressure pushes people into rushing and productivity drops off. It looks like the right amount of pressure is a good thing. For the possible effect on quality, if the software estimate is too low (too low a prediction) and the team does not have enough time, quality drops off. But as they have more time to work on the project, the quality goes up. I think we have all felt that.

Can trust have a possible effect on how a software estimate is perceived?

After discussing the possible effect of time pressure on productivity and quality above, it seems that there is a sweet spot where a certain amount of time pressure can end up with a nice trade-off between productivity and quality. So, from the estimator's point of view, what is the best way to pitch this to management (or a customer)? And from management's point of view, how do you know that the estimators are hitting the sweet spot?

These are interesting questions and I have found that when they are addressed early, the flow-on effect seems to have a positive impact on the project. So, can trust (in other people) have a possible effect on how a software estimate is perceived? In an experiment conducted by Jørgensen on the use of precision of software development effort estimates to communicate uncertainty, some very interesting results turned up that could help build trust.

In the experiment, people were asked to look at four developers' time estimates for a task. They were then asked, of the four developers: Who is the most competent? Least competent? Most trustworthy? And least trustworthy? The results of the experiment are shown in the table below.

| Developer | Time prediction | Most competent (%) | Least competent (%) | Most trustworthy (%) | Least trustworthy (%) |
|---|---|---|---|---|---|
| A | 'The work takes 1020 work hours' | 6 | 31 | 7 | 49 |
| B | 'The work takes 1000 work hours' | 11 | 13 | 7 | 14 |
| C | 'The work takes between 900 and 1100 work hours' | 74 | 1 | 70 | 1 |
| D | 'The work takes between 500 and 1500 work hours' | 9 | 55 | 16 | 36 |

The results show that Developer C is perceived as the most competent and most trustworthy. Their estimate uses a range and the gap is not too large. Developer D is most often perceived as the least competent and, after Developer A, as the least trustworthy, even though their estimate has the highest probability of being correct. As Jørgensen wrote in his book: with this in mind, it is not surprising, although unfortunate, that many prefer to be precisely wrong rather than roughly right in their time predictions. It also seems from the experiment that if the time predictions are too precise, people do not believe them (Developer A's 1020 hours was most often rated the least trustworthy). You can read more about the preciseness paradox in To Be Convincing or to Be Right: A Question of Preciseness.
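To make the difference between the four predictions concrete, here is a minimal sketch that expresses each one as an interval and computes how wide it is relative to its midpoint. The function name `relative_half_width` and the way the point estimates are encoded as zero-width intervals are my own assumptions for illustration; they are not taken from the experiment.

```python
# Illustrative only: the four predictions from the table as (low, high) work hours.
# Point estimates are encoded as zero-width intervals (an assumption of this sketch).
estimates = {
    "A": (1020, 1020),
    "B": (1000, 1000),
    "C": (900, 1100),
    "D": (500, 1500),
}


def relative_half_width(low, high):
    """Half the interval width as a fraction of the interval midpoint."""
    midpoint = (low + high) / 2
    return (high - low) / 2 / midpoint


widths = {dev: relative_half_width(low, high) for dev, (low, high) in estimates.items()}

for dev, width in widths.items():
    print(f"Developer {dev}: +/-{width:.0%} around the midpoint")
```

Seen this way, Developer C communicates roughly ten percent of uncertainty either side of 1000 hours, while Developer D communicates fifty percent, which may be part of why D is judged so harshly despite being the most likely to be right.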

Are Fibonacci-like scales and t-shirt sizes a good thing?

Using Fibonacci-like scales and t-shirt sizes is common practice in Agile software development. The Fibonacci-like scale used here starts with 1 and 2, and each following number is the sum of the previous two: the third number is 3 (1+2=3), the fourth is 5 (2+3=5), and so on. So, you end up with the sequence 1, 2, 3, 5, 8, 13, etc. T-shirt sizes are a way to replace the numbers in the sequence with labels.

- Extra small (xs) = 1

- Small (s) = 2

- Medium (m) = 3

- Large (l) = 5

- Extra large (xl) = 8

- Extra extra large (xxl) = 13

- And so on.

So you end up with xs, s, m, l, xl, xxl, etc. Using either a Fibonacci-like scale or t-shirt sizes has a few benefits. Firstly, having only discrete values to choose from can make the estimation process quicker, as it forces the estimator to make a decision using their expert judgement. Secondly, by removing the concept of time, the estimation becomes relative. If task A was a Small and task B was a Large, then task C can fit into a Medium. Once a team knows its velocity (how many story points are usually completed in a sprint), they can work out how many stories can be completed based on their relative estimations. But there is a bias that can lead to overoptimism and it is important you are aware of it if you use an approach like this.
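The mapping from t-shirt sizes to points and the use of velocity described above can be sketched in a few lines. This is only an illustration: the backlog, the velocity of 10 points, and the function names `fibonacci_like_scale` and `stories_that_fit` are hypothetical, and taking stories strictly in priority order is just one simple planning rule a team might use.

```python
def fibonacci_like_scale(length):
    """Return the first `length` values of the 1, 2, 3, 5, 8, 13, ... scale."""
    scale = [1, 2]
    while len(scale) < length:
        scale.append(scale[-1] + scale[-2])
    return scale[:length]


SIZES = ["xs", "s", "m", "l", "xl", "xxl"]
# Map each t-shirt size to its point value on the scale.
POINTS = dict(zip(SIZES, fibonacci_like_scale(len(SIZES))))


def stories_that_fit(backlog, velocity):
    """Take stories in priority order until the next one would exceed the velocity."""
    planned, total = [], 0
    for name, size in backlog:
        cost = POINTS[size]
        if total + cost > velocity:
            break
        planned.append(name)
        total += cost
    return planned, total


# Hypothetical backlog and velocity, for illustration only.
backlog = [("login", "m"), ("search", "l"), ("export", "s"), ("reports", "xl")]
planned, total = stories_that_fit(backlog, velocity=10)

print(POINTS)
print(planned, total)
```

With this made-up backlog, the first three stories add up to exactly 10 points, so the extra large story is left for a later sprint.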

The central tendency of judgement was published by Hollingworth in 1910 in The Journal of Philosophy, Psychology and Scientific Methods. The central tendency of judgement tells us that we tend to be affected by what is perceived to be the middle of the chosen scale. So, when the scale is not linear (like a Fibonacci-like scale), the middle can skew the results towards overoptimistic estimations. So, are Fibonacci-like scales and t-shirt sizes a good thing? The science is not conclusive on this question, but it is important to be aware of the central tendency of judgement.
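A small piece of arithmetic shows why the middle of a non-linear scale matters. The sketch below compares the middle option of the six-value scale with the midpoint of the range that the scale covers. It is my own illustration of the idea, not a calculation from Hollingworth or Jørgensen, and the evenly spaced comparison scale is a hypothetical.

```python
from statistics import median

fibonacci_like = [1, 2, 3, 5, 8, 13]
linear = list(range(1, 14))  # hypothetical evenly spaced scale: 1, 2, ..., 13


def scale_middle(scale):
    """The middle of the available options (what an estimator sees as 'medium')."""
    return median(scale)


def range_midpoint(scale):
    """The midpoint of the range of values the scale covers."""
    return (min(scale) + max(scale)) / 2


for name, scale in [("fibonacci-like", fibonacci_like), ("linear", linear)]:
    print(name, "middle option:", scale_middle(scale), "range midpoint:", range_midpoint(scale))
```

On the evenly spaced scale the two values coincide at 7. On the Fibonacci-like scale the middle option sits at 4 while the range midpoint is 7, so if judgement is pulled towards the middle option, it is pulled towards the lower end of the range.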

7 time prediction biases you must know

These 7 time prediction biases are straight out of the book, literally. They are listed in chapter 3 of Time Predictions: Understanding and Avoiding Unrealism in Project Planning and Everyday Life, and every one is intriguing. In my career as a software engineer, I have experienced each of these, and reading them listed out in order like this made it an enjoyable read indeed. I have listed the take home messages from Jørgensen here (with his permission), but go read the book as there are so many nuggets of information that simply cannot be summarised here.

1. The Team Scaling Fallacy

A workforce that is twice as large tends to deliver less than twice as much output per time unit due to an increase in the need for coordination. When predicting time usage for projects with many people, one usually needs to include a larger proportion of work on project management and administration and assume lower productivity than in smaller projects.

2. Anchoring

Anchoring effects in time prediction contexts are typically strong. The only safe method for avoiding anchoring effects is to avoid being exposed to information that can act as a time prediction anchor.

Anchors come in many shapes and disguises, such as budgets, time usage expectations, words associated with complex or simple tasks, early deadlines, and even completely irrelevant numbers brought to your attention before predicting time usage.

3. Sequence Effects

Your previous time prediction will typically influence your next time prediction. Predictions are biased towards previous predictions, meaning that predicting the time of a medium task after a small task tends to make the time prediction too low and predicting the time of a medium task after a large task tends to make the time prediction too high.

4. Format Effects

When the timeframe is short and a large amount of work must be done, the inverted request format, 'How much do you think you can do in X hours?', tends to lead to more overoptimistic time predictions.

5. The Magnitude Effect

Larger projects have been frequently reported to suffer from greater underestimation than smaller projects (magnitude bias) but, with observational data (as opposed to controlled experiments), this association between task size and prediction bias may be due to statistical artefacts.

Controlled experiments, avoiding the statistical problems of observational studies, suggest that a magnitude bias actually exists, at least for predictions of relatively small tasks.

Predicting that the time usage is close to the average of similar tasks will result in a magnitude bias but may also increase the accuracy of the predictions in situations with high uncertainty.

6. Length of Task Description

Longer task descriptions tend to increase the time predictions, but the effect may be weak for important real-world time predictions by people with relevant experience.

7. The Time Unit Effect

The selection and use of units in time predictions matters. Coarser-granularity units tend to lead to higher time predictions. In a context where overoptimistic time predictions are typical, it is important to avoid predicting time in finer granularity time units, such as work hours for tasks that require several person-months.

The choice of time units when predicting the amount of work to be completed in a given time seems to have little or no effect, such as in predicting the amount of work that can be completed in two hours versus 120 minutes.

Summary

With all of the biases and pitfalls of software estimations, it might seem like an impossible task to do good estimations. In my opinion, one of the most important things you need to know is that we are predictably irrational. It is possible to create a software estimation process that works if you are aware of these biases. There are plenty of great books (like the one from Magne Jørgensen) that have a balanced view of our prediction biases and habits. In this article, we dove a little deeper into the following four questions:

- Why are software developers (and designers) so overoptimistic with their estimations?

- What is the possible effect of time pressure on productivity and quality?

- Can trust have a possible effect on how a software estimate is perceived?

- Are Fibonacci-like scales and t-shirt sizes a good thing?

By researching and looking at some science, we were able to get a glimpse into the psychology behind software estimations. We now know that humans can be overoptimistic. We also know that there is a sweet spot on time pressure for productivity and quality. And if we can get our estimations on the sweet spot, it can have a good influence on the project. We also now know that how we present the estimations can build trust, which can also have a flow-on effect on the project. And lastly, we are aware of the central tendency of judgement when using Fibonacci-like scales and t-shirt sizes. Actually, there are many things to look out for, like the 7 time prediction biases you must know.

With a grounding in some theory of why software estimates are so hard, it is now possible for you to continue your own personal journey on doing software estimations better.
